Do you create the virtual space itself?

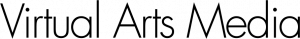

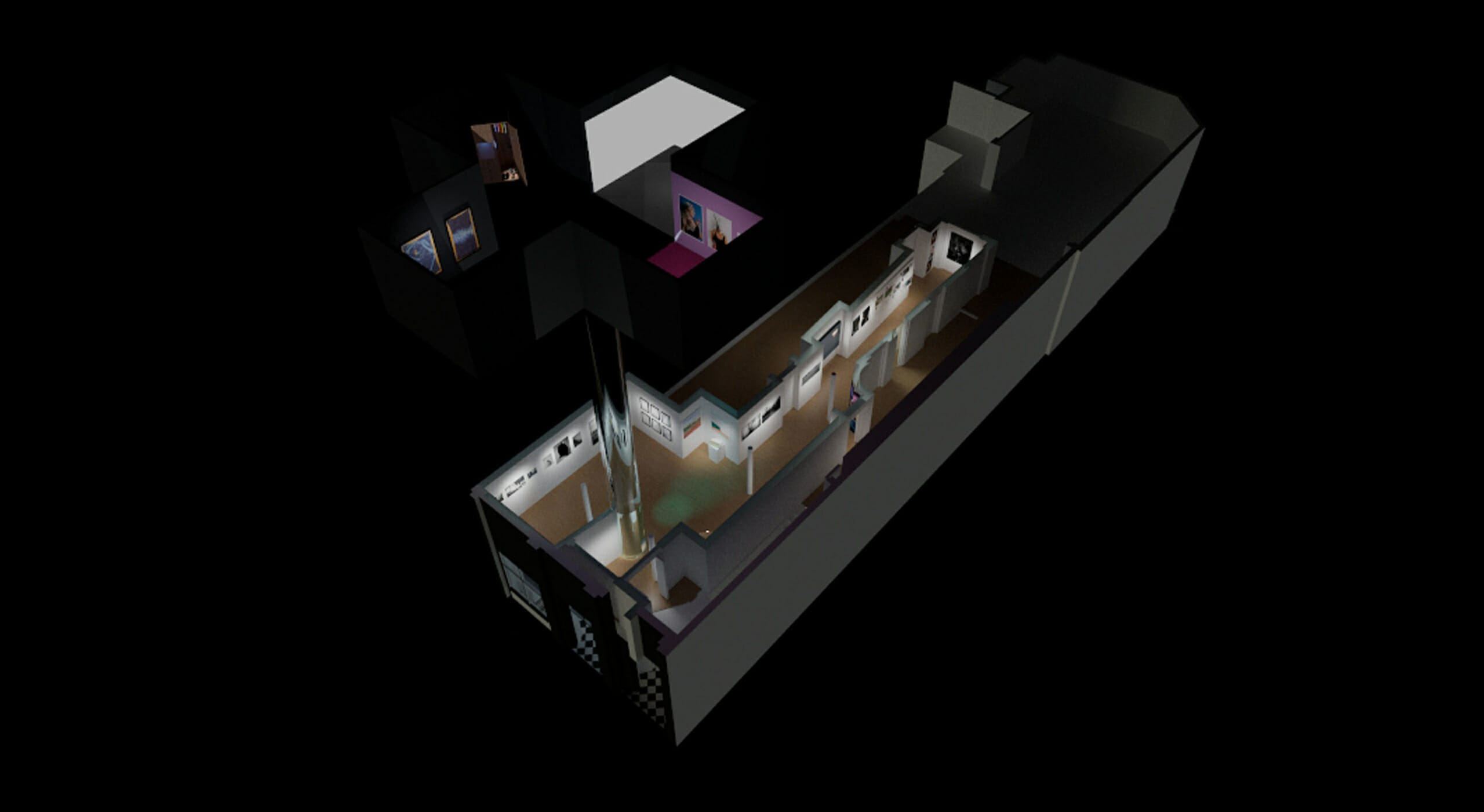

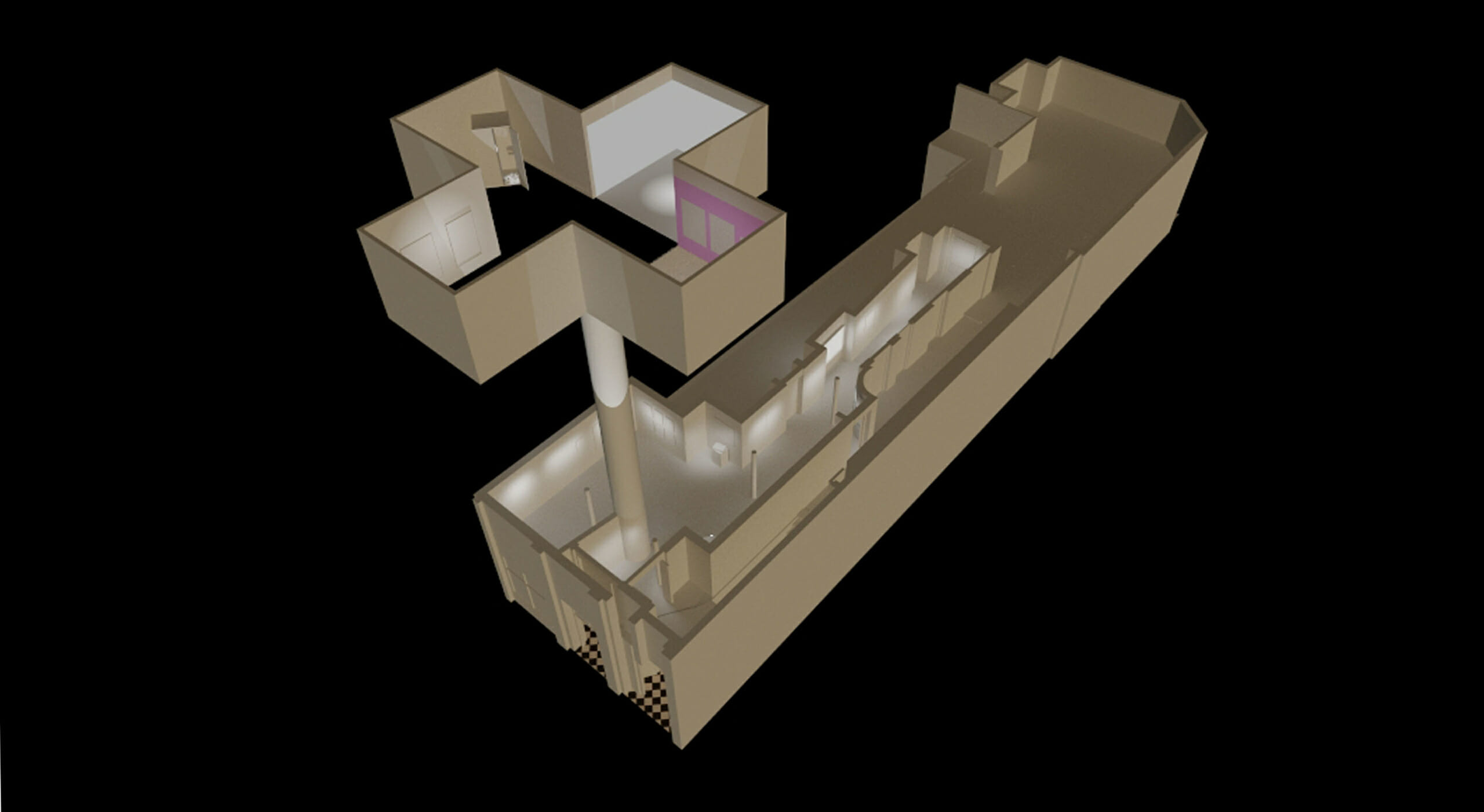

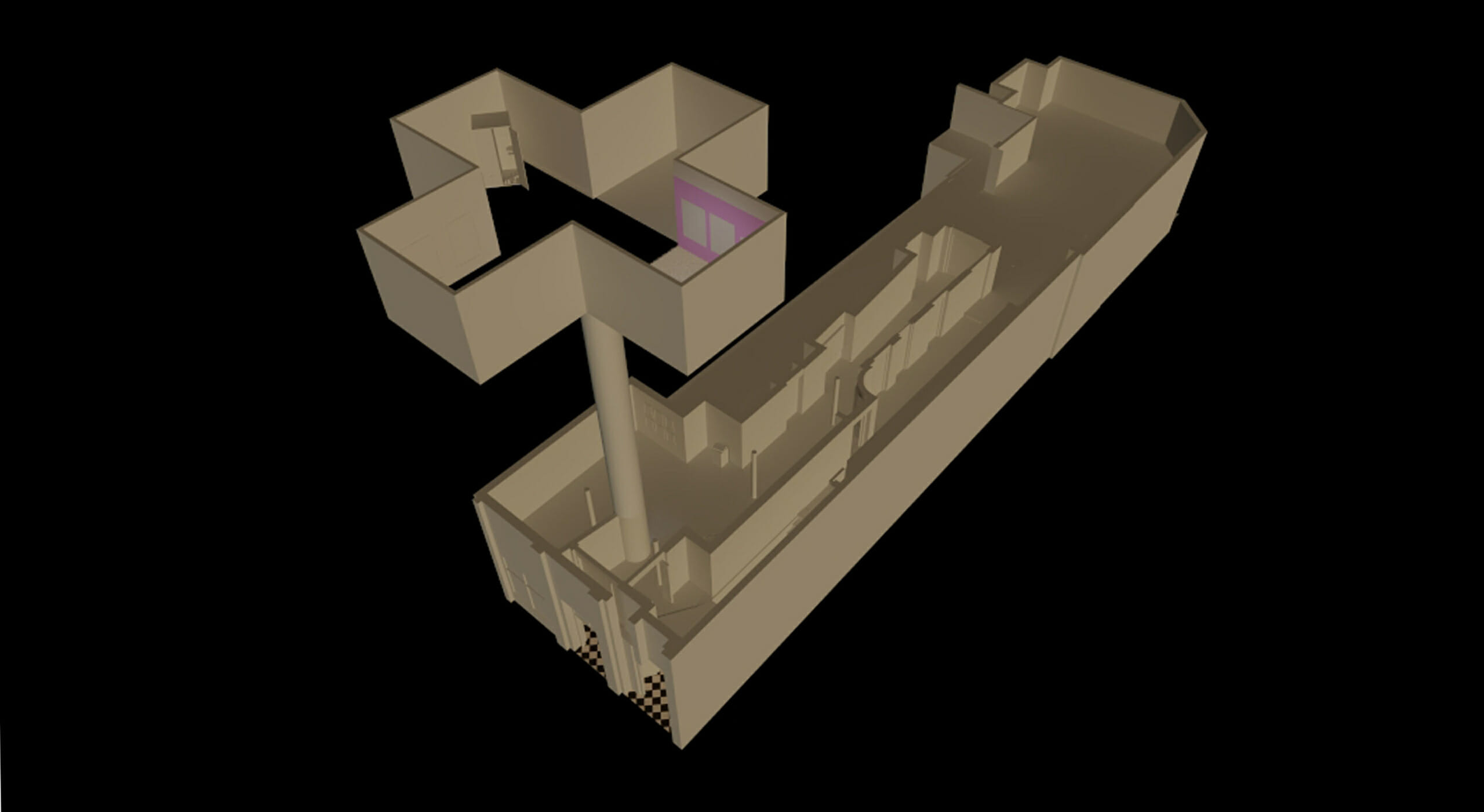

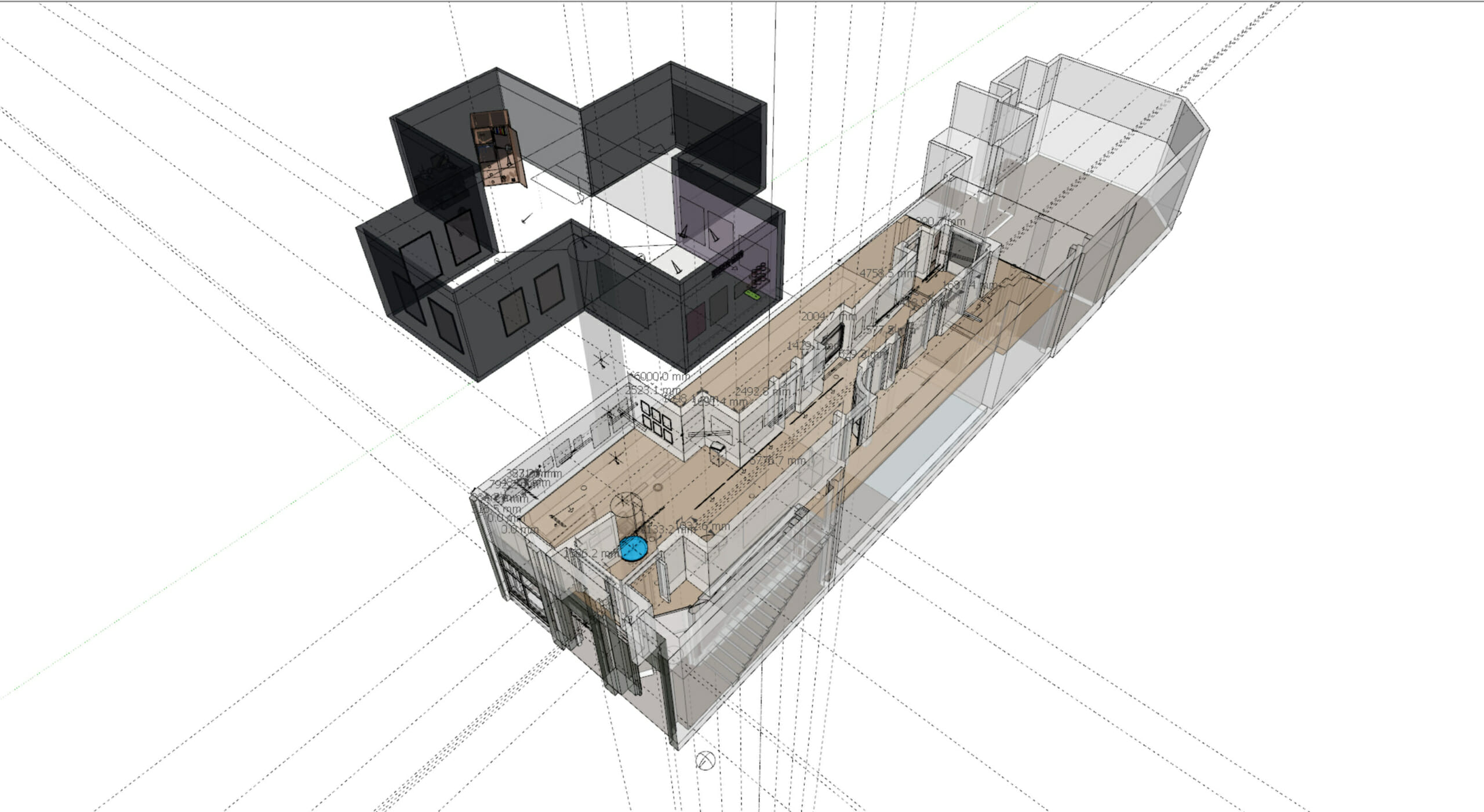

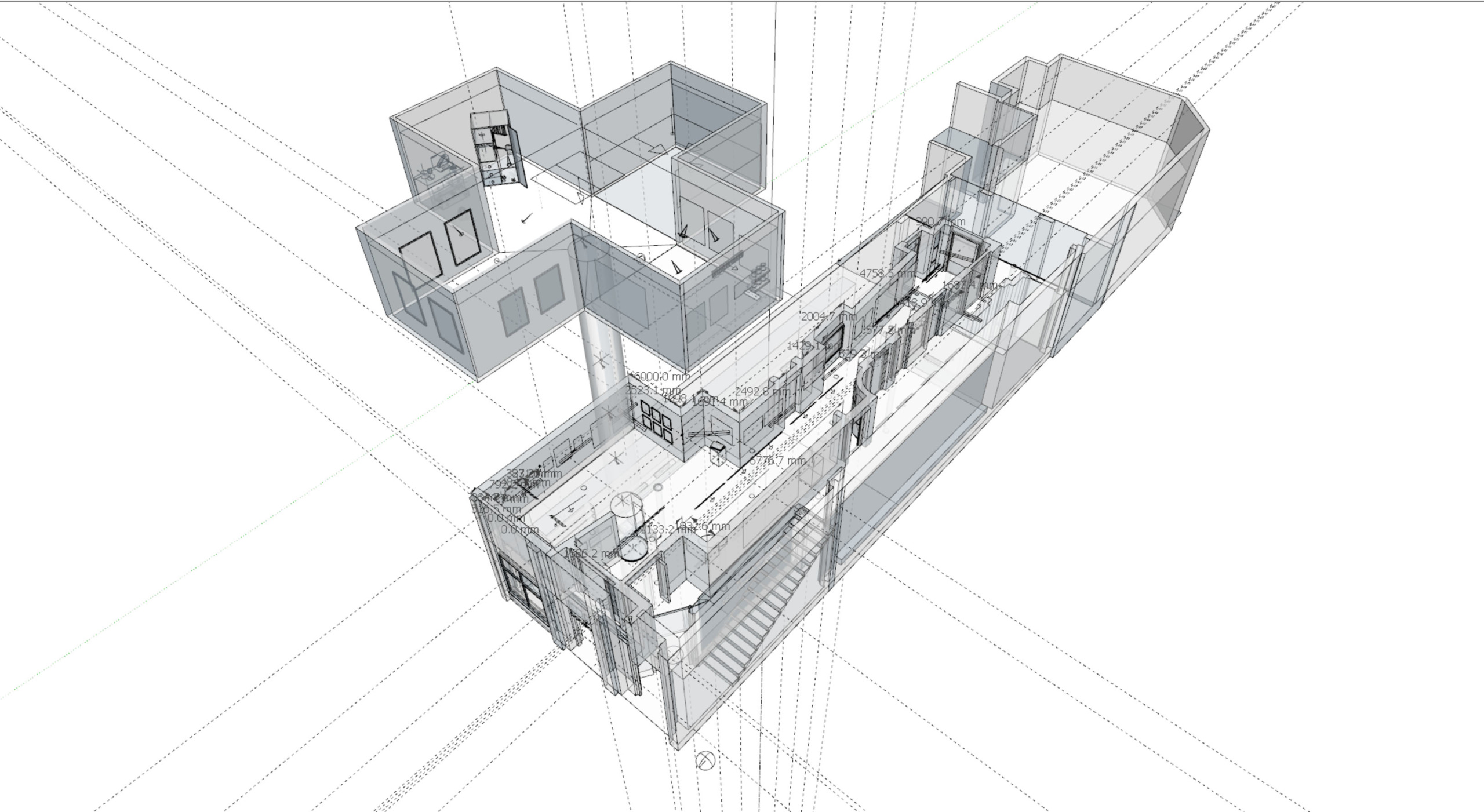

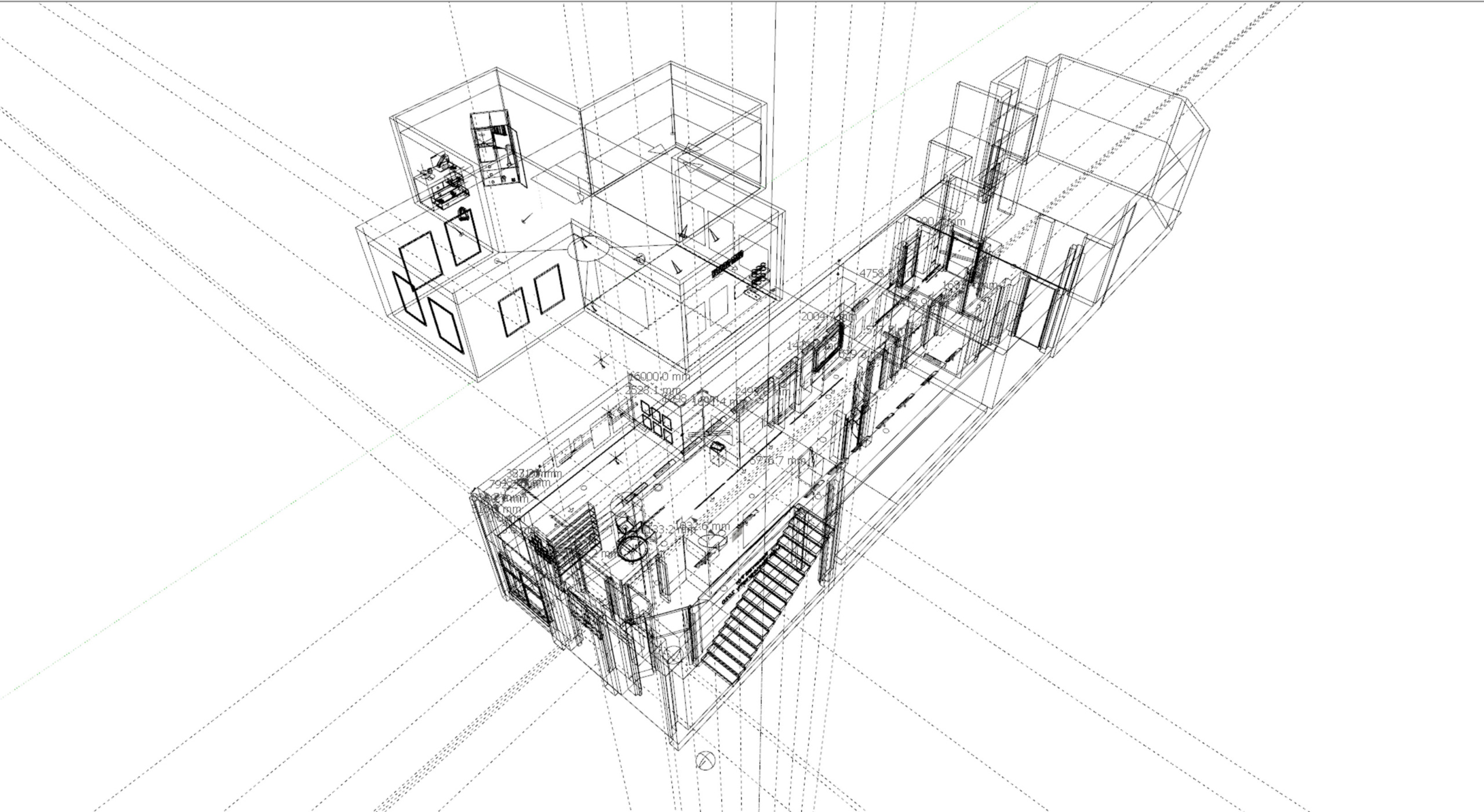

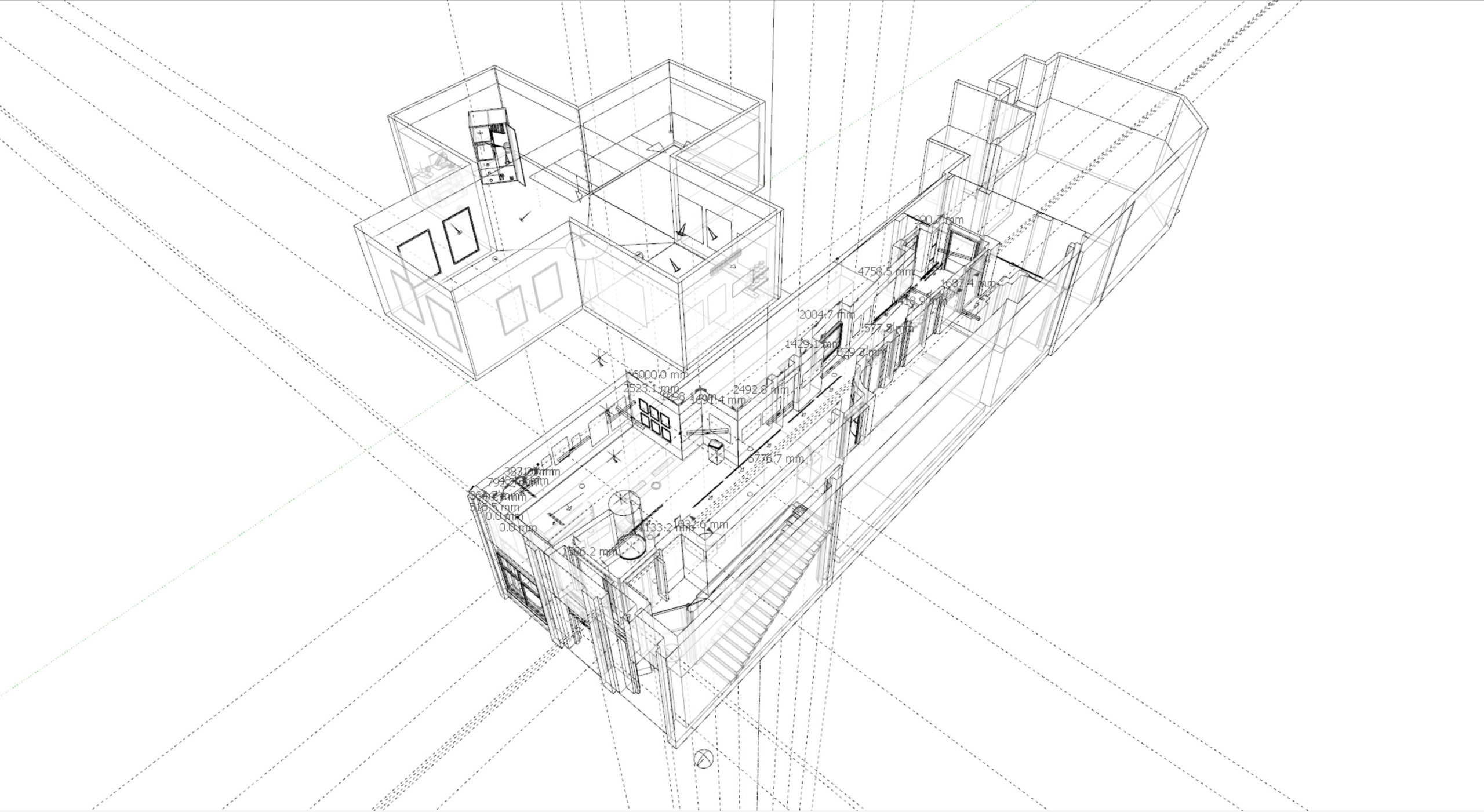

The Virtual Exhibitions are created within our own unique, hand-built three-dimensional models of a gallery or exhibition space.

This can be a model of a space that exists in real life, or a space created entirely in the virtual world. For this we use 3D design and rendering software of the kind found in architectural and product design. Where we have access to architectural floor plans and sections, the models can be built to highly accurate scale and dimensions.

How do you deal with sculpture, ceramics and three-dimensional work?

Capturing 3D work is a significantly greater challenge than capturing two-dimensional paintings and digital media made for this environment.

To capture a sculpture, for example, we use a procedure called photogrammetry, which is often used within the museum and heritage sector. The process requires anywhere from dozens to hundreds of high-resolution photographs of the item, taken every few degrees around it and from various heights.
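To make "every few degrees and from various heights" concrete, here is a minimal sketch of a capture plan. The `capture_plan` helper is hypothetical, and the 10° step and three camera heights are illustrative assumptions, not our actual shooting parameters.

```python
def capture_plan(step_degrees, heights_cm):
    """List (height, angle) camera positions: one full ring of shots per height.

    step_degrees must be a whole number of degrees that divides 360.
    Both parameters are illustrative; real shoots vary with the object's
    size, shape and surface.
    """
    if step_degrees <= 0 or 360 % step_degrees != 0:
        raise ValueError("step must be a positive whole divisor of 360")
    angles = range(0, 360, step_degrees)
    return [(h, a) for h in heights_cm for a in angles]

plan = capture_plan(10, [40, 100, 160])
print(len(plan))  # 36 angles x 3 heights = 108 photographs
```

Even this modest plan lands at over a hundred photographs, which is why a single sculpture takes so much longer to shoot than a painting.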

These images are processed using artificial intelligence to identify and create a 3D model from millions of tiny data points, each carrying texture and colour information. These points are joined to create surface polygons, and millions of these polygons form the surface of the object.

This 3D photogrammetric model is enormous, however: several GB, and much larger than any gallery model into which it could be placed. It therefore needs to be downscaled and converted before it can be inserted into the gallery model. There is a reduction in quality, but the appearance remains excellent. A large show such as the RUA may have dozens of these, so processing and storing the data and developing the model is a challenge, even with our super-powerful PCs.
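Downscaling a mesh usually means reducing its polygon count to some target. The sketch below only works out that target; it does not perform the reduction itself. It assumes file size scales roughly linearly with polygon count, which is a simplification because texture maps are sized separately. The 3 GB source, 100 MB budget, 20-million-polygon count and the `decimation_ratio` helper are all illustrative assumptions.

```python
def decimation_ratio(source_bytes, target_bytes):
    """Fraction of polygons to keep so the mesh fits the target size.

    Assumes size scales linearly with polygon count (a rough approximation).
    Returns 1.0 when the mesh already fits.
    """
    if source_bytes <= 0 or target_bytes <= 0:
        raise ValueError("sizes must be positive")
    return min(1.0, target_bytes / source_bytes)

GB = 1024 ** 3
MB = 1024 ** 2
ratio = decimation_ratio(3 * GB, 100 * MB)
polygons = 20_000_000  # illustrative source polygon count
print(f"keep {ratio:.1%} -> about {round(polygons * ratio):,} polygons")
```

Keeping only a few percent of the polygons gives an idea of the quality trade-off involved, and explains why some surfaces then need hand-finishing.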

Whereas I can virtually frame and hang 20-30 paintings in a day, we can only manage to shoot perhaps three sculptures per day, and each then requires several more stages of software manipulation. Certain surfaces, especially smooth ones, require hours of laborious hand-finishing. We can really only manage a handful of sculptural pieces at a time, and we advise allowing time and budget for this part of the process.

3D captures therefore cost more to produce because of operator time and processing. However, as well as appearing in the virtual exhibition and its documentation, they offer something unusual: a 3D model that the viewer can manipulate.

Should the artist wish to license limited-edition reproductions, a captured work could be 3D printed at whatever scale is desirable and in a variety of materials.

How do you move through the space?

Try it below…

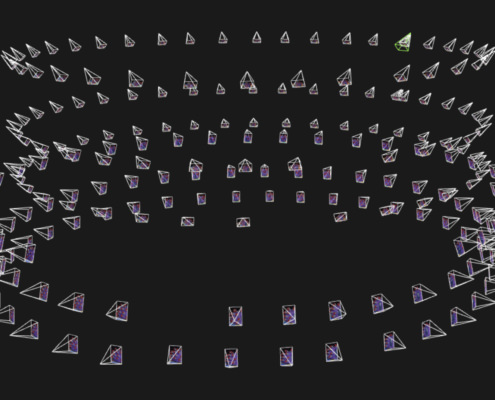

Once the model is created, the works are installed, and the lighting and decoration are complete, we can set about creating the virtual tour through the model.

This is done by selecting a number of points in each gallery from which the work can be viewed. This might be 2-3 points in a small room, or as many as 50 or 100 in huge gallery spaces. It really depends on the amount of artwork, the area of the space and the client's budget.

More points give a more realistic tour with greater detail of the individual works; fewer points give a flavour of the exhibition overall but less detail.

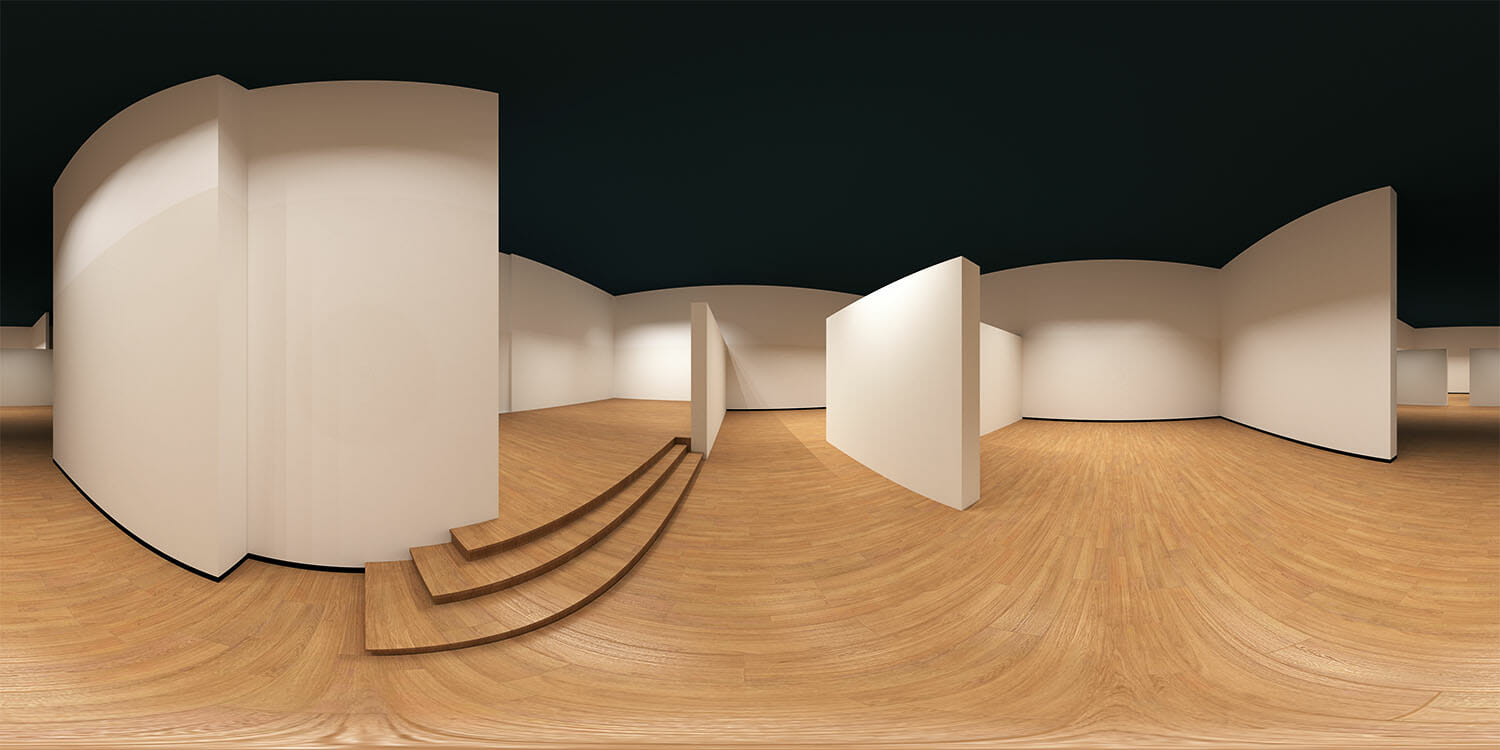

These viewing points are rendered out individually and linked together so that the viewer can decide which of the other viewing points in the gallery to go to next. Each point requires its own 360° panorama to be rendered from the model, which takes several hours. The number of renders, image resolution, amount of detail and the actual time needed to complete the renders are major factors to consider in the project schedule and turnaround time.
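The linked viewing points can be thought of as a graph. Each panorama is a node, and each clickable link to another point is an edge. Below is a minimal sketch with hypothetical point names. It uses a breadth-first search to confirm that every point can be reached from the entrance, so no rendered panorama is left stranded. This is an illustration of the idea, not the format any particular tour software uses.

```python
from collections import deque

# Hypothetical tour: each viewing point lists the points it links to.
tour = {
    "entrance": ["room1_centre"],
    "room1_centre": ["entrance", "room1_corner", "room2_door"],
    "room1_corner": ["room1_centre"],
    "room2_door": ["room1_centre", "room2_centre"],
    "room2_centre": ["room2_door"],
}

def reachable(links, start):
    """Breadth-first search: return every viewing point reachable from start."""
    seen = {start}
    queue = deque([start])
    while queue:
        point = queue.popleft()
        for nxt in links.get(point, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

stranded = set(tour) - reachable(tour, "entrance")
print("unreachable points:", sorted(stranded))
```

Because each panorama costs hours to render, it is worth checking the link structure before rendering rather than discovering an orphaned point afterwards.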

Talk technical...

I first started modelling these on a 5K i7 Retina iMac with maximum RAM and a fast SSD. It was great for video editing, but my first single panorama took 40 hours to render at full quality. That two-day render was enough to show me that the software was amazing but that I was seriously underpowered in computing and graphics processing power, like towing a JCB uphill with a Mini.

Ultimately I built a fast PC from scratch, leaning heavily on hardware more often seen in high-end gaming and VR rigs, and on software from architecture and product design.

That 40-hour render now takes a couple of hours, and we are planning our own 'render allotment', linking several computers to render each model simultaneously.
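A render allotment is essentially a scheduling problem: share the panoramas across the machines so that the last one finishes as early as possible. One simple heuristic is to assign the longest renders first, always to the least-loaded machine. This is a standard greedy approach, not necessarily how any render manager does it. The sketch below uses it, and the `allot_renders` helper, the 12 panoramas, the 2-hour render time and the 3 machines are all illustrative assumptions.

```python
import heapq

def allot_renders(render_hours, machines):
    """Greedy longest-first assignment of panorama renders to machines.

    Returns (makespan_hours, per-machine lists of render indices).
    """
    if machines < 1:
        raise ValueError("need at least one machine")
    heap = [(0.0, m) for m in range(machines)]  # (hours queued so far, machine)
    jobs = [[] for _ in range(machines)]
    # Longest renders first, each to whichever machine is least loaded.
    for job, hours in sorted(enumerate(render_hours), key=lambda jh: -jh[1]):
        load, m = heapq.heappop(heap)
        jobs[m].append(job)
        heapq.heappush(heap, (load + hours, m))
    return max(load for load, _ in heap), jobs

# Illustrative: 12 panoramas at about 2 hours each, across 3 machines.
makespan, jobs = allot_renders([2.0] * 12, 3)
print(makespan)  # 8.0 hours, versus 24 hours on a single machine
```

The same arithmetic explains the appeal of third-party render farms for short turnarounds: more machines shorten the schedule, at a cost.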

For huge super-resolution tours or jobs with a short turnaround, we can outsource the rendering to third-party render farms, whose servers speed through the output, but at an additional cost.

Could anyone do it?

To a degree, yes. However, as an artist, MFA graduate, art lecturer and professional arts media practitioner, I find that creating the virtual exhibition space follows an almost identical workflow to real-world exhibition curation and installation.

All aspects of the installation that support the work are considered, such as placing and controlling lighting and choosing floor coverings, reflectivity and paint colours, often adjusting to client specifications. We are not restricted to realistic approaches, so if a client wanted a floor of grass, walls of realistic fur and a haze to show the light beams, I could do that.

In our highest-quality models we come close to photorealistic images, with detail such as the texture and reflectivity of floors, even controlling the depth of the bumps left on a wall by a paint roller. These may seem like minor details, but the rendering software calculates rays and reflections of light as they interact with surface textures, so that the models respond as real paint on a wall or real floorboards would.

By focusing on this level of detail at the base-model level and constructing with care, we strive to reach the point where the viewer cannot tell the difference between the model and real life. Software, hardware, textures and materials get better every day, and models will become increasingly realistic.

Yes, it is clear that it's not real, but then it's also not.

What is next?

We are only getting started!

We are starting to develop ultra-high-resolution stereoscopic 3D VR versions, so that a viewer with a VR headset can be totally immersed in the art experience.

We also have exciting developments for other platforms. We have a basic prototype of our models working in Unreal, a game engine. Using high-quality 'gaming' navigation, these will offer freedom to move through the full model on a PC or PlayStation, with more realistic movement and no restriction on viewing positions or proximity to the work. That's a few months away, I suspect.

360° raw panorama of the Ulster Museum 5th Floor Gallery, set up for RUA 2019